
Even the best of things come to an end – and it’s the same for A/B testing. Knowing when to end an A/B test is crucial for reliable results: too soon, and you won’t gather enough data; too late, and you might have missed your chance to act.

A/B testing is a vital part of our proprietary IIEA Framework, so we eat, sleep, and breathe reliable A/B tests. If you don’t know already, this method of testing, also known as split testing, is when you have two or three slightly different versions of the same thing, perhaps a landing page or social media ad, and test them against one another. From this, you can work out which version is best and identify further areas for optimization. The trick is to only have minor differences, so you can precisely pinpoint which change causes the difference in results.

A/B tests are vital for any sort of experimentation and can provide you with a wealth of valuable data and information to inform your business decisions moving forward. Timing is everything – so let’s work out how to hit the spot with A/B tests every time.

The Number One Trap to Avoid

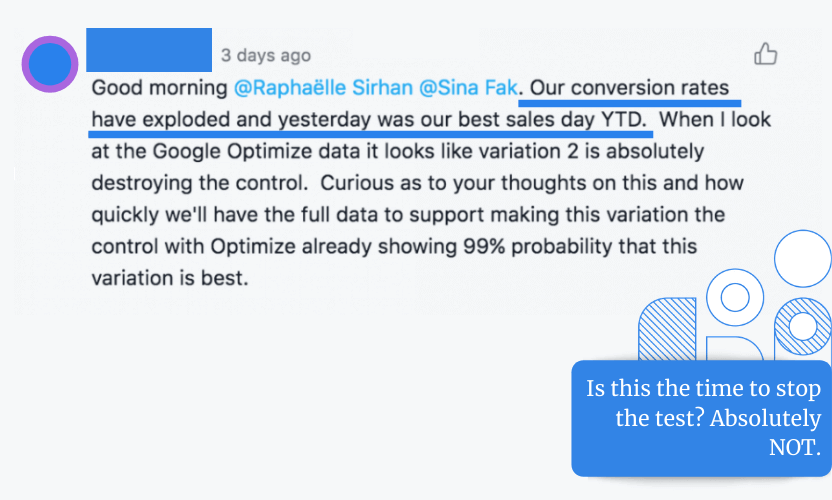

The other day we got this message from one of our clients in Asana.

Success! We’ve boosted conversion rates, job done. Is this the time to stop the test? Absolutely not.

Don’t get us wrong, we love it when we get “explosive” results for our clients immediately. It’s definitely a sign of something going right. But our primary objectives when testing are:

A) To learn more about why our hypothesis is working so that we can apply those learnings to future experiments.

B) To ensure the results we get are statistically significant.

Just because Google Optimize was showing a 99% probability that a variation would win doesn’t necessarily mean that the test was statistically significant.

When determining significance, it’s important to factor in:

- The historical conversion rate,

- Your confidence level (90% minimum),

- The Minimum Detectable Effect (MDE) you’re trying to measure.

Together, these inputs determine the amount of traffic you need before a test is truly significant. Ending the test too early, or as soon as you start to see positive results, means you stop before reaching that traffic and miss out on what a full run would have told you. Not only that, but you might actually be carrying unreliable information forward into new tests.
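To make this concrete, here is a rough sketch of how those three inputs can translate into a traffic requirement. It uses the standard normal-approximation formula for comparing two conversion rates, which is a common textbook approach and not necessarily what Google Optimize or any other tool does internally. The function name `sample_size_per_variant`, the 80% statistical power, and the example figures (a 5% historical conversion rate and a 10% relative MDE) are our assumptions for illustration only.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_mde,
                            confidence=0.90, power=0.80):
    """Approximate visitors needed in EACH variant of a two-variant test.

    baseline_rate: historical conversion rate, e.g. 0.05 for 5%
    relative_mde:  smallest relative lift worth detecting, e.g. 0.10 for +10%
    confidence:    confidence level of a two-sided test (0.90 = 90%)
    power:         chance of detecting a true lift of that size
                   (0.80 is an assumed convention, not from the article)
    """
    if not 0 < baseline_rate < 1:
        raise ValueError("baseline_rate must be between 0 and 1")
    if relative_mde <= 0:
        raise ValueError("relative_mde must be positive")

    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # rate we hope the variation reaches
    if p2 >= 1:
        raise ValueError("baseline_rate * (1 + relative_mde) must be below 1")

    alpha = 1 - confidence
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)

    # Sum of the binomial variances of the two conversion rates.
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Hypothetical inputs: 5% historical rate, +10% relative MDE, 90% confidence.
    n = sample_size_per_variant(0.05, 0.10, confidence=0.90)
    print(f"Visitors needed per variant: {n}")
```

With these assumed inputs the answer comes out in the tens of thousands of visitors per variant, which is why an "explosive" result after only a few days of traffic rarely settles the question. Dividing the total required visitors by your typical daily traffic gives a rough idea of how long the test needs to run.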

Some Final Thoughts

It’s a fine line to walk when conducting A/B tests. Experimentation using this method can be a true goldmine for data and analytics, so it’s something you want to cash in on and get right as soon as you can. Remember though: unreliable data is just as bad as no data, so don’t rush into using test data until you’re confident in its quality.

To make sure your A/B tests run without a hitch, book a consultation with us to get started. Our years of experience will make sure you test the right elements for the right length of time – and get the exact data you need to bring your business to the next level.